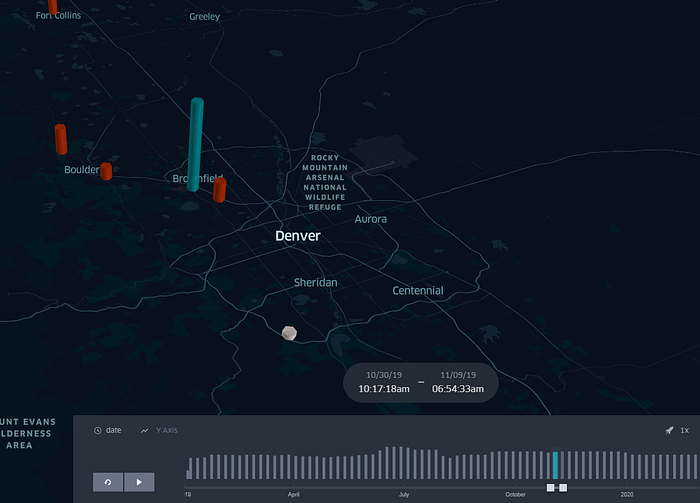

Downloading and Visualizing Denver Bike and Ped Data with Python and Kepler.gl

Hello everyone,

It’s been a while since I last published an article. I had a little spare time between jobs, so I decided to write a new one that explains how to download publicly available bike data from the city of Denver and visualize it using the KeplerGl library for Python.

By the way, I started working as a Lead Traffic Modeler at Ulteig. Please check out the company, and if you are in the transportation business, hit me up!

This article has actually become the first part of a series. I started all this to check whether bike ridership and pedestrian counts differ before and after COVID-19. However, CDOT only makes bike and pedestrian counts available up to two months before today’s date, so the data only goes up to Feb 29th, 2020. I will definitely revisit this in the future to do that comparison! Let’s start.

First, let’s import the libraries we will use: